Building a social understanding of AI.

Helen Margetts is optimistic about the relationship between technology and society.

The Professor of Society and the Internet at the Oxford Internet Institute (OII) began her first career in computing, working on mainframe computers programmed in COBOL, an early programming language.

With each successive generation of digital technology, including the most recent one of data-powered technologies such as AI, she has seen – and researched – the positive side of how these technologies might help government, society and democracy.

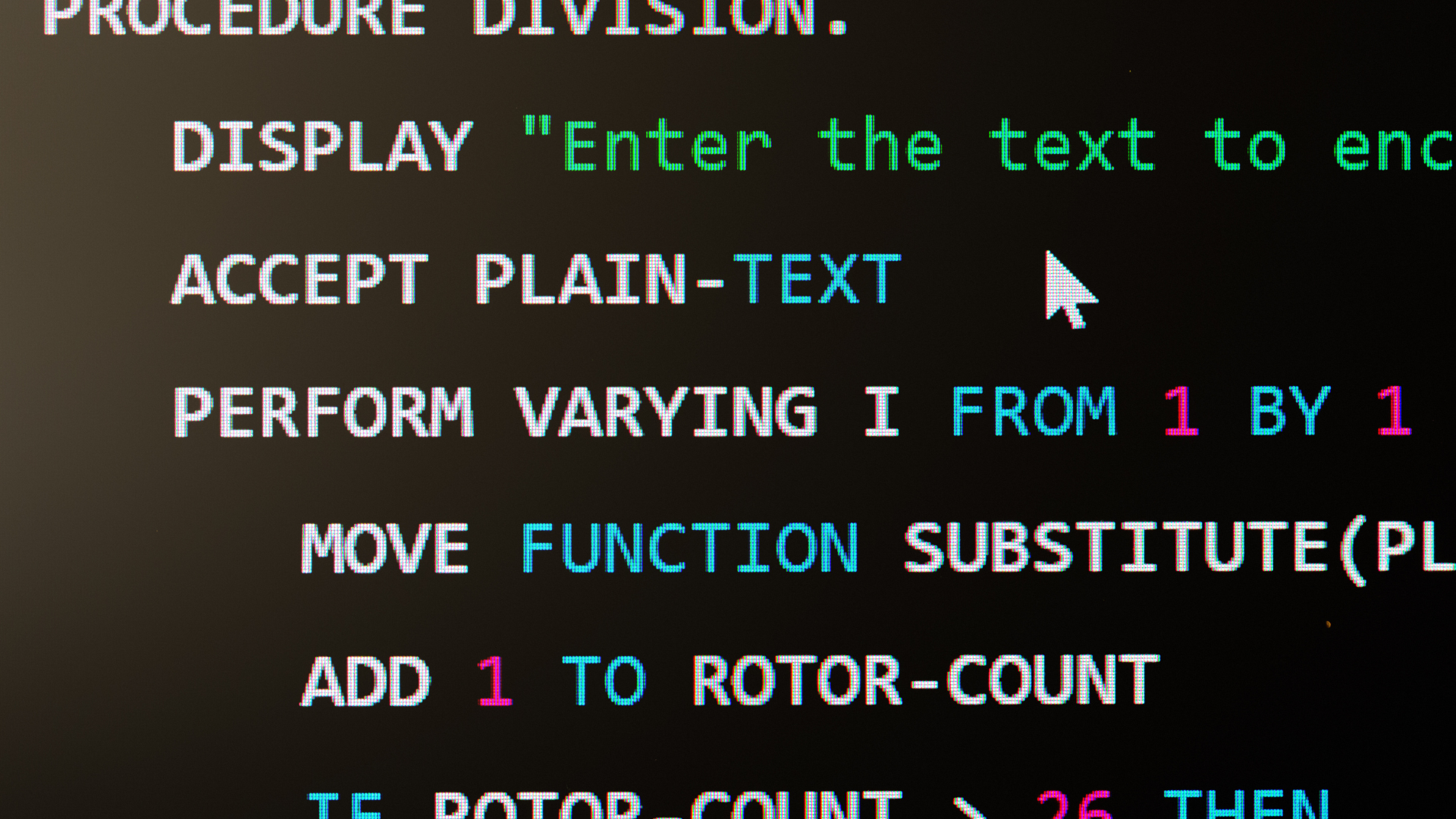

An example of COBOL

Professor Margetts takes the long view of the impact of AI.

Indeed, five years ago she set up the Turing Public Policy Programme to help government maximise the possible benefits of these data-powered technologies.

Her team of researchers, now 55 strong, also focus on how to innovate with AI ethically and responsibly, building frameworks that embed principles of fairness, accountability and transparency into AI development and deployment.

Although this work looks at both the opportunities and the well-documented risks of AI, she takes a dim view of the current focus in some quarters, including Silicon Valley and among UK political leaders, on ‘existential risk’ from AI.

She rolls her eyes. ‘Exponents of these views tend to concentrate on possible risks to generations far into the future.’

‘It is important to research long-term risks; technological innovations should always be accompanied by foresight and horizon scanning, and the UK as a country has been good at that.’

‘However, there are plenty of immediate risks from AI, which we need to guard against.’

She continues, ‘We need frameworks for ethical and responsible innovation which are based on agreed principles; we need regulatory responses to keep today’s citizens safe; and, more generally, we need to become more successful at integrating technology into society.’

‘I think Alan Turing had it right when he said, “We can only see a short distance ahead, but we can see plenty there that needs to be done.”’

One of the key risks from the latest generation of AI, she admits, is the potential to turbocharge online harms.

‘The commercialised interfaces for Large Language Models (LLMs), for example, could lead to a tsunami of pernicious misinformation or conspiracy theories, or targeted abuse and highly successful financial fraud. We need to be researching that.’

‘We need technical expertise to keep ahead of these developments and to design counter-interventions.’

‘And we need to build social understanding of how citizens experience AI; what methodologies allow us to understand their hopes, concerns, fears about AI – and feed them into how we deploy and develop these technologies.’

‘We need to understand how digital inequalities feed into equality per se – how these technologies can build feedback loops between citizens and governments to improve government.’

‘How different people are affected by different applications – what personalities tend to be attracted to conspiratorial thinking, for example?’

‘All that requires understanding from social science disciplines: political science, economics, psychology, sociology and so on.’

Professor Margetts worries that there is a tendency to blame technologies for the things that they reveal about society.

‘Biased operation of AI, when used in recruitment or loan applications or criminal justice, for example, is a very real and present danger of using AI in decision making. But these biases often reflect – and worse, reinforce – age-old human bias.’

She adds, ‘But we're all biased. We find it really difficult not to be biased.’

‘Anybody who has done unconscious bias training knows you are always having surprises about the extent to which you are biased, however much you do not mean to be.’

But what about the mistakes made by AI? Border control gates, or medical devices that have been proved to discriminate on grounds of ethnicity? Professor Margetts continues,

‘Bias can come from multiple sources. For example, it can come from machine learning algorithms being trained on data relating to human decisions from the past, which were biased, or from data embedded with correlations resulting from multiple biases experienced by certain groups.’

Professor Margetts discusses how AI could actually help to expose some of the biases in our democratic institutions.

‘Bias can also come from the designers and developers of AI, who tend to come from a limited demographic group and think in a certain way,’ Professor Margetts explains.

‘Both these biases were a problem in the early days of facial recognition technology, which was developed by and trained on people who worked in Silicon Valley, largely white men.’

But, she says, ‘So traditionally have been many of the people on hiring panels – or the professions of criminal justice systems, such as judges or police.’

‘The point is that the bias often originates from longstanding biases or divisions in society. Loans tend to be denied to people on precarious incomes – and perhaps those people are more likely to be from ethnic minorities – AI makes that link, even though it is not causal.’

A report, co-authored by Professor Margetts, on feelings about AI in the UK population

Ever the optimist, she continues, ‘The advantage of data-powered technologies such as AI is that the data makes these biases explicit, sometimes for the first time. Recognising that is a step towards being able to mitigate the bias.’

‘We might end up with organisations and decision-making processes that are fairer than they have ever been before.’

Professor Margetts is a social scientist with a Maths BSc, a Politics MSc and a PhD in government from the London School of Economics and Political Science (LSE).

She has a long track record of research that focuses on the relationship between computers, government and democracy, most recently as the Director (2011-2018) of the Oxford Internet Institute, a multi-disciplinary department of the University, and currently as Programme Director for Public Policy at the Alan Turing Institute, the national institute for Data Science and AI.

She was an early exponent of the ‘portfolio career’.

After her undergraduate degree in Maths at the University of Bristol (one of few women in her year) with a dissertation in the Philosophy of Mathematics, she became a graduate trainee in computer science for Rank Xerox.

Technology was moving fast – even if the massive mainframe computers on which she learnt to program lingered on in government into the 1980s and beyond.

People who understood computers were highly valued.

Professor Margetts took Maths, Physics and Chemistry at A level. But she also had a keen interest in English, Philosophy and Politics.

After a few years in computing in the private sector, she used a ‘loyalty payment’, received when her company moved from central London to Hanger Lane, to fund Master’s study in Politics at LSE.

‘I wanted to write essays,’ she recalls simply. ‘When I was at school, there had been quite a lot of pressure to do something ‘useful’.

‘Also, I was the first from my (girls’) school to read Maths at university, and the headmistress had said I would not be able to do it. So, of course, both my mother and I were determined that I should.’

At LSE, though, she combined her interest in politics and government and expertise in mathematics and technology.

After completing her Master’s, she took a part-time doctorate, which investigated computers in central government in Britain and America, while working full-time as a researcher with the political science professor Patrick Dunleavy at LSE.

Her PhD about computer systems in government in Britain and America showed the extent to which both governments had struggled to maximise the potential of these technologies to improve public policy making or public services.

From the LSE, Professor Margetts went to London University’s Birkbeck College as a politics lecturer, creating the MSc in Public Policy and Management, and then to University College London to become the first professor of political science, soon becoming Director of the School of Public Policy.

University College London

From there, some 17 years ago, she came to Oxford, to take up the Chair in Society and the Internet at the recently founded Oxford Internet Institute.

The Department was set up in 2001 as a multi-disciplinary department to research and study the relationship between technology and society.

‘I missed London,’ she says in a typically honest aside. ‘But I thought Oxford was a nicer place for my son to grow up.’

And she has stayed, partly because – as her X/Twitter feed demonstrates – it is a nice place to live and walk the dog, particularly on misty Oxford mornings on the banks of the river.

‘Wet, wet, wet.’ pic.twitter.com/dw7e7MEpUx (Helen Margetts, @HelenMargetts, October 27, 2023)

But also because she found that her vision of research at the disciplinary boundary could be realised at the OII, where disciplines are represented right across the social sciences and across all four Divisions of the University.

She enjoyed the dynamic academic atmosphere and is proud of the OII’s research.

‘I believe passionately that social science should be there in the design, the development and the deployment of all technology, but particularly AI; that social science questions and insight should be informing research and development.’

‘Of course, there are many multi-faculty universities, but I think the kind of balance you get in Oxford is wonderful,’ adds Professor Margetts.

As a political scientist, she is particularly interested in the possible impacts of the digital world on democracy and is currently writing a book about it, inspired by the many current works predicting the end of democracy.

She says, ‘There have been many books about democratic backsliding or even the end of democracy, where technology is implicated. First it was the internet, then it was social media, now it is AI.

Fireside Chat with Stuart Russell and Helen Margetts: Living with Artificial Intelligence

‘The focus is on the negative impacts of these technologies, while failing to lay out the possible positive developments, and how these technologies might even improve democracy.’

‘For example, you can argue that social media have fuelled collective action and mobilisation around policy issues all over the world, allowing people with no more resources than a mobile phone to fight against injustice.’

With decades’ experience and a clear understanding of both the benefits and limits of technology, Professor Margetts concludes, ‘I am optimistic that getting the technology right could help us to shape a period of democratic regeneration.’

‘If you think about it, democracy is a radical, redistributive idea, with its defining characteristics of popular control and political equality. In the age of AI, we should nurture that aspiration.’
